Eyes that Hear: Enhancing Accessibility with Smart Glasses for the Deaf

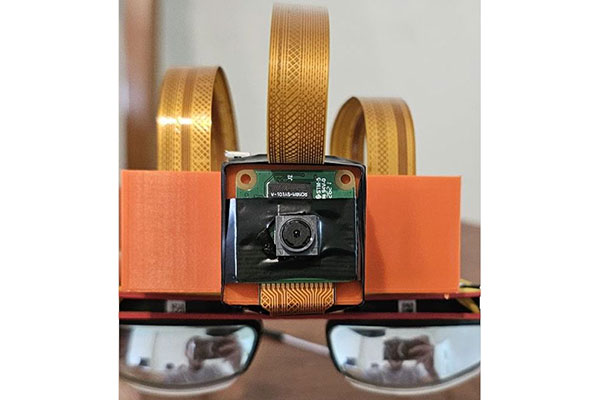

People with disabilities, especially those with hearing loss, face serious barriers to communicating and participating fully in society. Although technological advances have opened new avenues for accessibility, there is still an acute need for tools that help hearing-impaired people communicate with the general public. To enable smooth two-way communication and improve learning opportunities for deaf people, this project presents Smart AR Glasses, a system built around three modules. First, the Text-to-Speech Translation Module converts text typed on a smartphone into audible speech played through the glasses. Second, the Speech-to-Text Translation Module transcribes spoken language into text displayed on the glasses. Third, the Object Detection and Learning Module (ODLM) uses the glasses' camera to identify the object the wearer is looking at and displays its name on the glasses. The ODLM is a structured, level-based learning system designed for children, starting with colors, fruits, and animals; each level presents a different set of items whose names appear whenever they are detected, so that children learn interactively. By improving communication and providing modern learning tools, the initiative aims to help deaf people integrate into society more successfully. Smart AR Glasses combine augmented reality, speech recognition, and machine learning into a single solution to the communication and learning challenges faced by hearing-impaired people.
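The level-based flow of the ODLM can be sketched as follows. This is a minimal illustration under stated assumptions, not the team's implementation. It assumes an object detector that returns (label, confidence) pairs, a confidence threshold of 0.5, and a rule that a level is complete once every item in it has been shown at least once. Only the category names (colors, fruits, animals) come from the project description. The item lists and the names `LEVELS`, `Detection`, and `LearningSession` are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical level contents: the project names only the categories
# (colors, fruits, animals); the individual items are illustrative.
LEVELS = [
    ("colors", {"red", "green", "blue", "yellow"}),
    ("fruits", {"apple", "banana", "orange"}),
    ("animals", {"cat", "dog", "bird"}),
]


@dataclass
class Detection:
    label: str         # class name from an assumed object detector
    confidence: float  # detector score in [0, 1]


@dataclass
class LearningSession:
    level: int = 0
    min_confidence: float = 0.5  # assumed display threshold
    learned: set = field(default_factory=set)

    def labels_to_display(self, detections):
        """Return the names to show on the glasses for one camera frame.

        Only confident detections that belong to the current level are shown,
        each at most once per frame, and they are recorded as learned.
        """
        _, items = LEVELS[self.level]
        shown = []
        for d in detections:
            if (d.confidence >= self.min_confidence
                    and d.label in items
                    and d.label not in shown):
                shown.append(d.label)
        self.learned.update(shown)
        return shown

    def level_complete(self):
        # Assumed rule: every item of the current level has been shown once.
        return LEVELS[self.level][1] <= self.learned

    def advance(self):
        # Move to the next level only when the current one is complete.
        if self.level_complete() and self.level + 1 < len(LEVELS):
            self.level += 1
            return True
        return False
```

For example, on the colors level a frame containing a confident "red", a confident "apple", and a low-confidence "blue" would display only "red": the apple belongs to a later level and the blue detection falls below the threshold. Filtering by level keeps the display focused on the items the child is currently learning.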

Project Details

- Student(s): Ali Assi, Sara El-Kass, Rona Elias

- Advisor(s): Dr. Samer Saab

- Year: 2024-2025

![photo](https://soe.lau.edu.lb/images/capstone_ece_24-25_EyesThatHear_pic1.jpg)