Generative Evolutionary-Developmental Pipeline for Emotion-based Music Composition

Project Details

- Student(s): Paul El Kouba

- Advisor(s): Dr. Joe Tekli | Co-Supervisor: Dr. Ralph Abboud

- Department: Electrical & Computer

- Academic Year(s): 2023-2024

Abstract

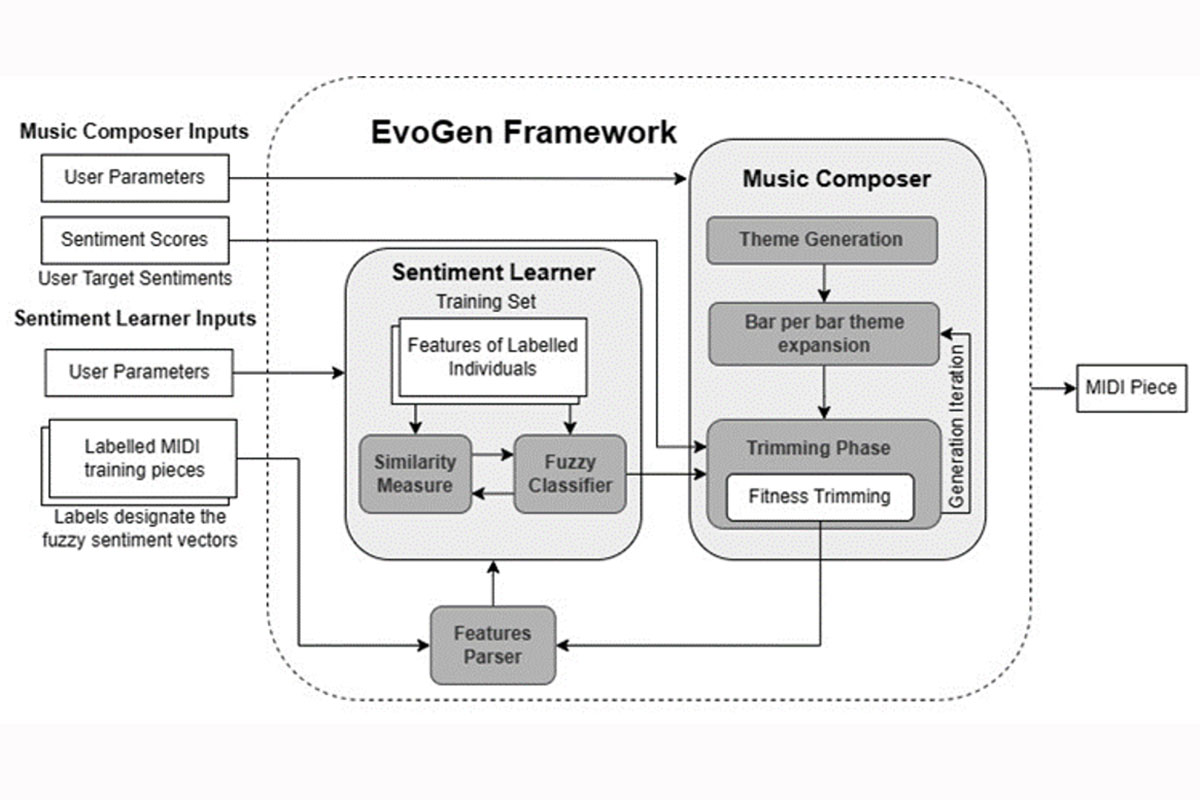

With the progress of Natural Language Processing (NLP) and Artificial Intelligence (AI), many artists have turned to these technologies to compose new music pieces efficiently and effectively. Most existing music generation solutions rely on unconditioned generative models which, despite generating good-quality music, often fail to convey the desired messages or emotions in the composed pieces. To address this issue, we propose a pipeline that combines generative machine learning with evolutionary-developmental models to generate high-quality music scores that express a vector of five emotions (i.e., anger, joy, love, sadness, and surprise). The proposed pipeline includes an emotion learner that estimates the fuzzy emotion scores of newly generated pieces (e.g., 55% joy, 25% love, and 20% surprise) by analyzing hundreds of symbolic and frequency-based musical features. The emotion learner acts as the fitness function of the evolutionary-developmental pipeline: a generative music composer produces the music pieces, and the emotion learner evaluates them. The pipeline retains the fittest (i.e., most emotionally accurate) pieces and uses them to prime the following generation. The process continues iteratively until it converges or reaches a user-specified generational threshold. We are currently completing the implementation of the pipeline. We plan to evaluate its effectiveness in both music emotion analysis and composition, using a large benchmark of labelled music pieces and legacy evaluation metrics.
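The generate, evaluate, select, and prime loop described in the abstract can be illustrated with a minimal Python sketch. It is not the project's implementation: `toy_compose` and `toy_score_emotions` are hypothetical stand-ins for the generative composer and the emotion learner, a piece is assumed to be a plain list of MIDI pitches, and the fitness measure (one minus half the L1 distance between the predicted and target emotion vectors) and the fixed fitness threshold used as the convergence test are assumptions chosen only for illustration.

```python
import random

EMOTIONS = ("anger", "joy", "love", "sadness", "surprise")


def fitness(predicted, target):
    """Assumed fitness: 1 minus half the L1 distance, so 1.0 is a perfect match."""
    return 1.0 - 0.5 * sum(abs(p - t) for p, t in zip(predicted, target))


def toy_compose(primer, rng):
    """Hypothetical composer: vary the primer, or start from random pitches."""
    if primer is None:
        return [rng.randint(48, 84) for _ in range(16)]
    steps = (-2, -1, 0, 1, 2)
    return [min(84, max(48, note + rng.choice(steps))) for note in primer]


def toy_score_emotions(piece):
    """Hypothetical emotion learner: map mean pitch to a fuzzy emotion vector."""
    brightness = (sum(piece) / len(piece) - 48) / 36  # scaled to 0..1
    raw = (0.1, brightness, 0.2, 1.0 - brightness, 0.1)
    total = sum(raw)
    return tuple(value / total for value in raw)  # scores sum to 1


def evolve(compose, score_emotions, target, population_size=20,
           survivors=5, max_generations=50, threshold=0.95, seed=0):
    """Iterate until the fitness threshold or the generation limit is reached."""
    rng = random.Random(seed)
    primers = [None] * survivors  # the first generation is unprimed
    best_piece, best_fit = None, float("-inf")
    for _ in range(max_generations):
        # Generate: each new piece is primed by one of the current survivors.
        population = [compose(rng.choice(primers), rng)
                      for _ in range(population_size)]
        # Evaluate: the emotion learner's output drives the fitness score.
        ranked = sorted(population,
                        key=lambda piece: fitness(score_emotions(piece), target),
                        reverse=True)
        top_fit = fitness(score_emotions(ranked[0]), target)
        if top_fit > best_fit:
            best_piece, best_fit = ranked[0], top_fit
        if best_fit >= threshold:  # assumed convergence criterion
            break
        # Select: the fittest pieces prime the following generation.
        primers = ranked[:survivors]
    return best_piece, best_fit


# Target taken from the abstract's example: 55% joy, 25% love, 20% surprise.
target = (0.0, 0.55, 0.25, 0.0, 0.20)
piece, score = evolve(toy_compose, toy_score_emotions, target)
print(dict(zip(EMOTIONS, toy_score_emotions(piece))), round(score, 3))
```

In a real pipeline the two stand-ins would be replaced by a trained generative model and a trained emotion classifier; the loop structure itself would stay the same.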