LLM Knowledge Distillation through Task-Specific Teacher Prompting

Project Details

- Student(s): Ralph Aouad

- Advisor(s): Dr. Joe Tekli | Co-Supervisor: Mr. Joseph Attiyeh, Ph.D. Student

- Department: Electrical & Computer

- Academic Year(s): 2023-2024

Abstract

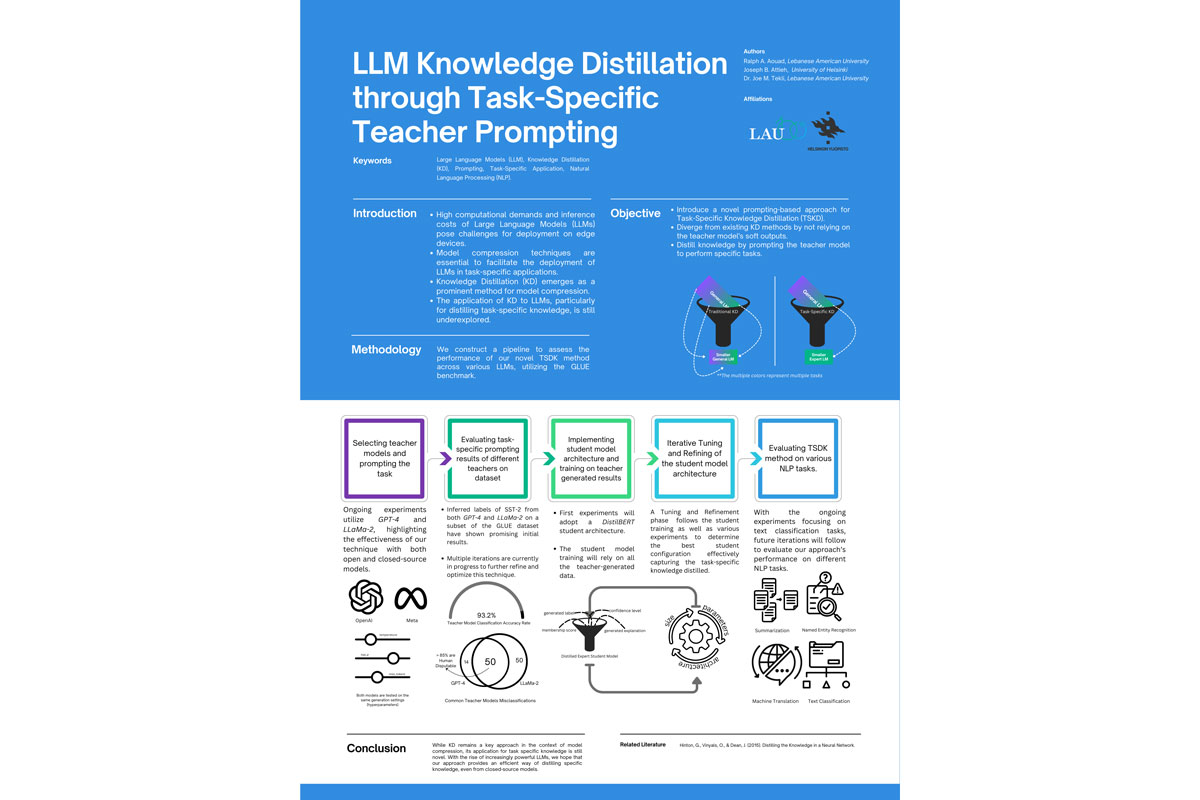

In the era of Large Language Models (LLMs), high computational demands and inference costs pose significant challenges, especially for deployment on edge devices. Innovations in model compression are therefore needed so that large general-purpose models can be replaced by smaller models in task-specific applications. Knowledge Distillation (KD) is a key approach in this context, yet its application to LLMs remains underexplored, particularly for distilling task-specific knowledge. Our study introduces a novel prompting-based approach for Task-Specific Knowledge Distillation (TSKD). Unlike existing solutions, which rely on the teacher model’s soft outputs, our method distills specific knowledge by prompting the teacher model to perform specific tasks. To validate our solution, we build a pipeline that assesses the performance of our TSKD method across various LLMs using the GLUE benchmark. We present our findings through a series of experiments that evaluate the student models’ efficiency and inference time and investigate how changes in their architecture and size affect the knowledge transfer process.
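The abstract does not specify the prompts, the label format, or how the student is trained. The following is therefore only a minimal sketch of one plausible reading of prompting-based distillation, not the project’s actual implementation. It assumes an SST-2-style sentiment task from GLUE: the teacher is prompted to answer the task directly, its free-text answer is parsed into a class label, and the student is trained with ordinary cross-entropy on those teacher-produced labels. Soft-output KD, by contrast, matches the teacher’s logits and needs access to them. The prompt template, the label words, `stub_teacher`, and every function name below are hypothetical. A real pipeline would replace `stub_teacher` with a call to the teacher LLM.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical prompt template for an SST-2-style sentiment task (assumption).
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following sentence as 'positive' or 'negative'.\n"
    "Sentence: {sentence}\n"
    "Answer:"
)

# Hypothetical mapping from the teacher's answer words to class ids.
LABELS = {"negative": 0, "positive": 1}


@dataclass
class DistillationExample:
    sentence: str
    label: int  # label produced by the prompted teacher, not a gold label


def parse_teacher_answer(answer: str) -> Optional[int]:
    """Map the teacher's free-text answer to a class id, or None if unparseable."""
    text = answer.strip().lower()
    for word, label in LABELS.items():
        if text.startswith(word):
            return label
    return None


def build_distillation_set(
    sentences: List[str], teacher: Callable[[str], str]
) -> List[DistillationExample]:
    """Prompt the teacher on each input and keep the examples it labels cleanly."""
    dataset = []
    for sentence in sentences:
        answer = teacher(PROMPT_TEMPLATE.format(sentence=sentence))
        label = parse_teacher_answer(answer)
        if label is not None:  # drop answers that match no known label word
            dataset.append(DistillationExample(sentence, label))
    return dataset


def cross_entropy(student_logits: List[float], teacher_label: int) -> float:
    """Hard-label loss on the teacher's answer; no teacher logits are required."""
    m = max(student_logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(z - m) for z in student_logits))
    return log_z - student_logits[teacher_label]


def stub_teacher(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: a keyword rule, for illustration only."""
    sentence = prompt.split("Sentence: ")[1].split("\n")[0]
    if "great" in sentence:
        return " Positive"
    if "boring" in sentence:
        return " negative."
    return "I am not sure."


if __name__ == "__main__":
    data = build_distillation_set(
        ["A great film.", "A boring plot.", "It exists."], stub_teacher
    )
    for example in data:
        print(example)
```

With the stub teacher, the third sentence yields an unparseable answer and is dropped. Only the two labeled examples would be used to train the student. Filtering rather than guessing a label is a design choice in this sketch, and it keeps noisy teacher outputs out of the student’s training data.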